What We Take For Granted

This is the lightning(-ish) talk I gave at TigerBeetle 1000x World Tour Belgrade, transcribed to article form. Huge thanks to Ludwig for hosting the event and the TigerBeetle folks for setting it up.

The slides are available in PDF format as well.

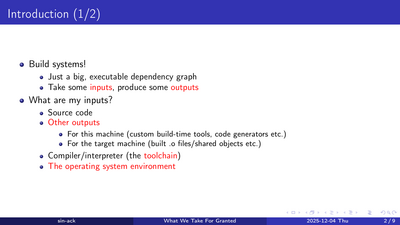

Build systems! They are basically big, executable dependency graphs: at each node, they take in some inputs and produce some outputs. Now, what can those inputs be? You have the source code, of course. You have the outputs produced by the nodes in the graph that this node depends on. Those outputs can be for the machine the build is being performed on, in which case they are build-time tools such as asset pre-processors and source-code generators. Or they can be for the machine the artifacts will be executed on, in which case they are just previous object files, libraries, headers and so on (some pre-built, some built on demand). Additionally, the compiler or interpreter (depending on the language we’re using) is an input as well, since it is what we use to produce the artifact we’re after. And finally, even though we don’t really think about it that much, the operating system environment we run against is an input too, as we will see.
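To make “everything is an input” concrete, here is a minimal Python sketch of how a node’s cache key could be derived so that the toolchain and the OS environment count alongside the sources. The `Action` class and its fields are hypothetical, not any real build system’s API:

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Action:
    """A single node in a hypothetical build graph (not any real tool's API)."""

    sources: dict[str, bytes]        # source path -> file contents
    dep_outputs: dict[str, bytes]    # outputs of the nodes this one depends on
    toolchain: str                   # identifies the exact compiler/interpreter used
    environment: dict[str, str] = field(default_factory=dict)  # OS facts, e.g. target libc

    def cache_key(self) -> str:
        """Digest over every declared input; if any of them changes, the key changes."""
        h = hashlib.sha256()
        for kind, files in (("src", self.sources), ("dep", self.dep_outputs)):
            for path in sorted(files):  # sorted so that dict order never matters
                h.update(f"{kind}\0{path}\0".encode())
                h.update(hashlib.sha256(files[path]).digest())
        h.update(f"toolchain\0{self.toolchain}\0".encode())
        for key in sorted(self.environment):
            h.update(f"env\0{key}\0{self.environment[key]}\0".encode())
        return h.hexdigest()
```

Drop `toolchain` or `environment` from the digest and you get exactly the non-hermetic behavior described next: swapping the compiler or the target libc leaves the key unchanged, so a stale artifact gets reused.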

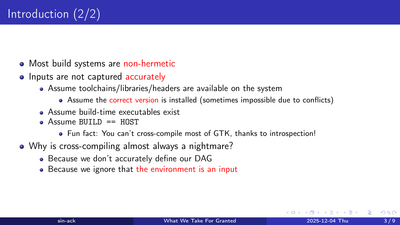

Most build systems are non-hermetic. What this means is that not all of the inputs used to produce an output are captured accurately in the graph. For one, we assume that the toolchain we use to produce the output, the libraries we link against, the headers we include, etc. are present on the system; furthermore, we assume that the correct versions of those libraries are installed (which is not always possible, as we may have other software on the machine that expects a different, incompatible version of the library). We assume that the executables we use at build time exist on the host system (while this is usually true, when it matters the most it may not be! More on this later). Sometimes, we also assume that the system we’re building on is the system we’re building for, so we try to run executables that we build with no regard for cross-compilation[1].
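One practical way to see this is to compare what the compiler actually read against what the graph declared. GCC and Clang can write a Make-style dependency file with `-MD`; the Python sketch below parses such a file and reports every input the graph never declared. The function names are hypothetical, and the parser assumes a single rule (no `-MP` phony targets) and paths without spaces or colons:

```python
def parse_depfile(text: str) -> tuple[str, list[str]]:
    """Parse a single-rule Make-style depfile, as written by `cc -MD`.

    Simplification: assumes no path contains spaces or colons.
    """
    joined = text.replace("\\\n", " ")         # undo backslash line continuations
    target, _, deps = joined.partition(":")  # "target: dep dep dep"
    return target.strip(), deps.split()


def undeclared_inputs(depfile_text: str, declared: set[str]) -> list[str]:
    """Files the compiler actually read that the build graph never declared."""
    _, deps = parse_depfile(depfile_text)
    return [dep for dep in deps if dep not in declared]
```

Anything reported here, typically a header under `/usr/include`, is an ambient dependency: the output depends on it, but nothing in the graph says so. This is roughly the idea behind the “undeclared inclusion(s)” errors Bazel reports for C/C++ actions.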

More generally, why is cross-compiling almost always a nightmare? I would argue that it’s because we don’t accurately define our build graph, and more specifically, we forget that the operating system environment is an input[2].

Now, let’s take a look at some things we take for granted instead of accounting for in our build systems.

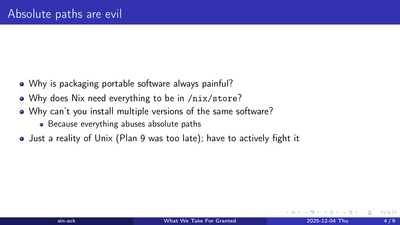

Absolute paths are evil

Why is it always so painful to package portable software?

Why does Nix require you to put everything in /nix/store, or else go without any substitutes?

Why can’t you install multiple versions of the same application or library, unless the package manager has provisioned for it specifically?

The answer to all of these questions is that we rely on absolute paths; or more specifically, we rely on absolute paths within a global namespace. This is one of those fundamental mistakes of Unix we just have to live with. While Plan 9 wasn’t too little (it had some pretty cool ideas[3]), it was certainly too late: Unix had become the standard by the time it was released. Now all we can really do is actively avoid relying on the global namespace as much as possible.

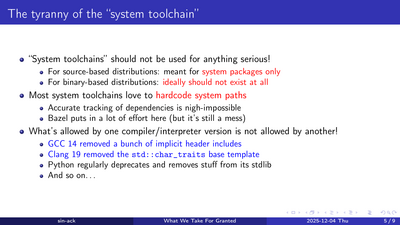

The tyranny of the “system toolchain”

The “system toolchain” is what you get when you ask your package manager to give you a C compiler or Python interpreter. I argue that these should not be used for anything serious. In Linux distributions in particular, there are two cases to consider with system toolchains:

- For source-based distributions, where the system toolchain is used to build the packages that are installed to the system directly (perhaps with some customization), they should only be used for system packages.

- For binary-based distributions where packages are compiled ahead-of-time for you, they should ideally not be used at all, or only used for quick-and-dirty testing.

The main problem with system toolchains is that they love to hardcode and use system paths by default, which usually point to libraries that are specific to that system. One has to go out of their way to disable this, and if they don’t, accurate handling of dependencies becomes nigh-impossible[4]. Furthermore, both compilers and interpreters break things from version to version, so relying on whatever version the system happens to ship is very error-prone. Just some examples:

- GCC 14 removed a bunch of implicit header includes, breaking any code that relied on things like the `<cstdint>` types being available through other headers.

- Clang 19’s libc++ removed the `std::char_traits` base template, which broke large projects like Facebook’s Folly.

- Many interpreted languages like Python routinely deprecate and remove parts of the standard library, which breaks code because the standard library is tied to the runtime.
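To make the Python point concrete: the long-deprecated `imp` module was removed outright in Python 3.12, so a build script calling `imp.load_source` works or fails depending only on which interpreter the system happens to ship. A minimal sketch of the `importlib`-based replacement, following the “importing a source file directly” recipe from the `importlib` documentation; `load_module_from_path` is a hypothetical helper name:

```python
import importlib.util
import sys
from pathlib import Path
from types import ModuleType


def load_module_from_path(name: str, path: Path) -> ModuleType:
    """Load a Python source file as a module; replaces the removed imp.load_source()."""
    spec = importlib.util.spec_from_file_location(name, str(path))
    if spec is None or spec.loader is None:
        raise ImportError(f"cannot create a module spec for {path}")
    module = importlib.util.module_from_spec(spec)
    sys.modules[name] = module  # register before executing, as the recipe does
    spec.loader.exec_module(module)
    return module
```

Pinning the interpreter in the build graph means you port code like this on your own schedule, rather than whenever the host distribution upgrades.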

My tar is not your tar!

When we say tar, we are usually talking about GNU tar, which is the implementation shipping with most Linux distributions. However, this assumption breaks down as soon as we want to package something on macOS or the BSDs, which do not use GNU tar.

Just as a silly comparison:

- The `bsdtar` man page taken from FreeBSD, with metadata removed, is around 6000 words.

- The GNU `tar` Texinfo documentation, with navigation sections and other metadata removed, is 90000 words and weighs 1.3MB in HTML format.

Despite this, bsdtar includes features that GNU tar does not, such as mtree support, which allows explicitly specifying the attributes of each file placed in a tar archive using a metadata file.

I’m using tar as the example here, but this isn’t limited to tar: any tool you run should be controlled by your build system so that you can ensure reproducibility.
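One way to stop depending on whichever `tar` is on the host, at least for simple packaging, is to produce archives with something the build already pins, such as the standard library of a pinned Python interpreter. A minimal sketch, assuming uncompressed archives where only entry order, timestamps, ownership and permission bits need normalizing; `reproducible_tar` is a hypothetical helper, and this is no substitute for features like bsdtar’s mtree support:

```python
import tarfile
from pathlib import Path


def _normalize(info: tarfile.TarInfo) -> tarfile.TarInfo:
    """Drop metadata that depends on who built the archive, where, and when."""
    info.mtime = 0
    info.uid = info.gid = 0
    info.uname = info.gname = ""
    # Keep only the executable/non-executable distinction from the permissions.
    info.mode = 0o755 if info.isdir() or info.mode & 0o111 else 0o644
    return info


def reproducible_tar(src_dir: Path, out_path: Path) -> None:
    """Write an uncompressed tar of src_dir with a fixed entry order."""
    entries = sorted(src_dir.rglob("*"))  # directory listing order is not stable
    with tarfile.open(out_path, "w", format=tarfile.PAX_FORMAT) as tar:
        for path in entries:
            arcname = path.relative_to(src_dir).as_posix()
            tar.add(path, arcname=arcname, recursive=False, filter=_normalize)
```

If the archive is then gzipped, note that the gzip header carries its own timestamp; `gzip.GzipFile` accepts an `mtime` argument for pinning it.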

At least coreutils are guaranteed, right…?

Well, you might say that since tar is a tool with multiple diverging implementations, you can expect incompatible behavior there. But surely we can at least rely on the shell and coreutils, right?

macOS didn’t ship the realpath command until Ventura in 2022. This was a source of breakage in many shell scripts that use realpath to figure out their own location, so that their commands can reference paths relative to the script.
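Where a build-time helper can be written against an interpreter the build already pins, it can locate itself without relying on a host `realpath` binary. A minimal Python sketch; `script_dir` is a hypothetical helper name:

```python
import os
from pathlib import Path


def script_dir(script_path: str) -> Path:
    """Directory that really contains the script, following any symlinks.

    os.path.realpath is part of the Python runtime and does not shell out to a
    `realpath` binary, so it works whether or not the host provides one.
    """
    return Path(os.path.realpath(script_path)).parent
```

Inside the script itself, this would be called as `script_dir(__file__) / "data"` to reach a `data` directory shipped next to it.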

The BSDs and macOS don’t ship recent versions of Bash (4.0+, released in 2009!), or simply don’t ship Bash at all, due to licensing concerns (philosophical and/or legal).

Let’s not even get into Windows, which is its own separate world where you cannot redistribute system tools even if you wanted to.

In fact, even Bazel makes the same mistake here: genrule targets, which are how you define targets that run one-off scripts, do not declare a dependency on the shell they’re run under or on the tools they run, which breaks running any genrule or shell binary in the Linux hermetic sandbox.

More generally, if you don’t include your tools in your build graph, your build graph is not accurate.[5]
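Short of adopting a hermetic build system, a cheap first step is to make tool lookup fail loudly when a tool was never declared. A minimal Python sketch of an action runner that resolves executables only from directories the graph declared, and hands the child process a `PATH` built from those directories alone. `resolve_tool`, `run_action` and `UndeclaredToolError` are hypothetical names; this only controls `PATH` lookup and does nothing to stop a process from opening an absolute path such as `/bin/sh` directly (a script’s `#!/bin/sh` line is exactly that), which is what a real sandbox is for:

```python
import os
import shutil
import subprocess
from pathlib import Path


class UndeclaredToolError(LookupError):
    """Raised when an action asks for a tool the build graph never declared."""


def resolve_tool(name: str, declared_dirs: list[Path]) -> Path:
    """Look `name` up only in declared tool directories, never in the host PATH."""
    search_path = os.pathsep.join(str(d) for d in declared_dirs)
    found = shutil.which(name, path=search_path)  # empty search path -> None
    if found is None:
        raise UndeclaredToolError(f"{name!r} is not provided by any declared tool directory")
    return Path(found)


def run_action(argv: list[str], declared_dirs: list[Path]) -> subprocess.CompletedProcess:
    """Run an action whose PATH consists solely of declared tool directories."""
    tool = resolve_tool(argv[0], declared_dirs)
    env = {"PATH": os.pathsep.join(str(d) for d in declared_dirs)}  # nothing inherited
    return subprocess.run([str(tool), *argv[1:]], env=env, check=True,
                          capture_output=True, text=True)
```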

Now, if you’ve dabbled in build systems for a bit, there’s an obvious question here: “Why not just use Nix for fixing these things?” And Nix does work great, if you are using it strictly as a hermetic build tool. Unfortunately, the DX is pretty awful:

- Running any command becomes painfully slow very quickly, as evaluation time dwarfs build/run times. For even small Rust projects, I’ve had each `nix build`/`nix run` hang for 5-10 seconds before anything was built.

- Nix generally works at package granularity, not at file/module granularity[6]. This means that even for small changes we usually cannot benefit from incremental builds. `nix shell` is a way to work around this, but that’s only really viable for simple builds where the build system being wrapped by Nix is able to generate the final artifact we’re working with on its own (which isn’t the case, for example, when building things like OCI images).

- Fixing things when they break usually comes down to fiddling with things until they start working again. In general, since we are working with a fully-fledged functional programming language, the error messages are more generic than those of something specialized for build systems, and it can get confusing very fast.

- A particular pet peeve of mine: many actions are uncancellable once started. If I evaluate the wrong thing, I will wait for 10 minutes while the REPL evaluates every package in Nixpkgs. Or, if I run `nix build` and change my mind, I can’t cancel the build in any way short of manually terminating the command.

So, in light of all we’ve talked about, what do I recommend if you want to stop taking things for granted and build software that runs on many systems? Well, first of all, please use a hermetic build system. It has a higher up-front cost, because it makes you answer some questions that you previously didn’t have to think about, such as “how do I obtain a compiler?” and “how do I obtain a version of the library for a given target system?” However, these are questions that you will have to answer for anything non-trivial anyway, so the investment pays off in the end.

My personal recommendation, if you want to focus on DX while keeping things mostly hermetic, is to use Bazel with a proper toolchain setup[7]. Bazel is not perfectly hermetic like Nix is[8], but it has far better DX, and IDEs are starting to gain native support for it.

Second, if you’re building portable software, assume as little as possible about the system you’re running on. Ideally, your software package should only depend on the system interpreter (the dynamic loader) and libc[9], and perhaps on vendor libraries like libGL (since these cannot be shipped ahead-of-time generically). Your RUNPATH values should be set up relatively, using $ORIGIN, so you don’t accidentally go off trying to pick things up from the system. But also, don’t forget about system integration. For hermetic build systems, this means building against a sysroot and/or using the SONAMEs of libraries instead of their paths/names when linking[10].
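As a sketch of the $ORIGIN point: given where a binary and its private libraries will sit inside the install tree, the RUNPATH entry is just the relative path between the two, prefixed with `$ORIGIN`, which the dynamic loader expands to the directory containing the object being loaded. The helpers below are hypothetical and only compute strings, assuming a GNU-style linker driven through GCC or Clang:

```python
import posixpath


def origin_runpath(binary_dir: str, lib_dir: str) -> str:
    """RUNPATH entry locating lib_dir relative to the binary's own directory.

    Both arguments are locations inside the same install tree; the result
    contains no absolute path, so the tree can be moved anywhere.
    """
    rel = posixpath.relpath(lib_dir, binary_dir)
    return "$ORIGIN" if rel == "." else f"$ORIGIN/{rel}"


def runpath_link_flags(binary_dir: str, lib_dir: str) -> list[str]:
    """Compiler-driver flags as argv elements (passed without a shell, so no quoting)."""
    return [
        "-Wl,--enable-new-dtags",  # emit DT_RUNPATH rather than the older DT_RPATH
        f"-Wl,-rpath,{origin_runpath(binary_dir, lib_dir)}",
    ]
```

For a binary in `bin/` and libraries in `lib/`, this yields `$ORIGIN/../lib`. If the flags do pass through a shell or Make, `$ORIGIN` has to be escaped (single quotes in a shell, `$$ORIGIN` in a Makefile) so it reaches the linker literally.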

And since we’re at a TigerBeetle conference, and we presumably have a lot of Zig users here, what about zig build? I’d say zig build is pretty good for what it does, which is building Zig projects (with a side of C/C++). For Zig code specifically, if you pin the compiler version you’ve got a lot of hermeticity already: dependencies are specified with explicit hashes and built with a known compiler version, so your build-time tools built with Zig are accurately represented in the graph too. For third-party libraries, All Your Codebase is a collection of popular libraries packaged to be linked against directly using zig build. In general, if you’re building Zig/C/C++ code and make minimal use of linkSystemLibrary outside of system integration, zig build is fine; but once you step outside these boundaries, I’d recommend the above instead.

And more generally, please Care about your build systems. Thank you.

[1] Fun fact: You cannot cross-compile GTK! The `g-ir-scanner` tool, part of `gobject-introspection`, loads shared objects compiled for the target machine to generate introspection data, and it’s run at build time, which means your target system must be the same as your build system. I raised this issue a while back in the GTK Matrix and got a response that amounts to “working as intended” 🙃

[2] An obvious question is “but why don’t we define our build graph accurately?” There are several different factors here:

- Our tools assume ambient dependencies by default rather than forcing us to answer this question, so we just don’t think about it.

- “Standard” build systems like Make are not adequately equipped to set this up without significant scaffolding.

- Language-specific tooling usually isn’t precise enough to describe how to build things like native extensions (which is why I advocate for polyglot build systems). The Python ecosystem especially suffers from this.

[3] Here I am talking about each process having its own file system namespace, and parent processes mounting paths to child processes. There are other (sometimes cool, sometimes rather wacky) ideas in Plan 9 but I believe this to be one of the most fundamentally useful.

[4] Bazel, when using the system toolchain, auto-detects system paths and automatically sets up dependencies against libraries and headers (and limits the paths that targets are allowed to include from), but it’s still super messy and can cause confusing build failures.

[5] “Well, just stick to POSIX shell and coreutils!” The POSIX-compliant versions of these tools frequently exclude very useful features that extended versions provide. I believe that if my build system is capable enough to describe build-time tool dependencies and how to obtain them, I should not restrict myself to the lowest common denominator. Furthermore, the Windows issue still applies.

[6] I’m aware that it’s possible with dynamic derivations to build things granularly, but this still appears to be in its infancy, and all the other DX problems still apply.

[7] “Proper toolchain setup” here means using something like `hermetic_cc_toolchain` or `toolchains_llvm_bootstrapped`. The former in particular uses `zig cc`, which has built-in support for compiling against a specific `glibc` version without requiring a sysroot, which is ideal for building portable software on Linux that still requires a dynamically-linked libc for some reason.

Of course, this is just for C/C++. Other languages have their own ways to set up toolchains, but it’s usually just a matter of requesting one using a module extension, and ruleset documentation is adequate for setting this up. The hairiest one I know of outside of C/C++ is the Java setup, which requires `rules_java` for the actual binary/library targets and toolchains, `contrib_rules_jvm` for Gazelle, and `rules_jvm_external` for fetching dependencies from Maven repositories.

[8] In particular:

- Unless explicitly disabled with an environment variable, the C/C++ toolchain is automatically configured from the host system by default, breaking hermeticity.

- Even with `--incompatible_strict_action_env`, the `PATH` environment variable exposed to actions is `/usr/bin:/bin`, which means a reliance on tools installed on the system. These are not a limited set of tools like coreutils, but rather the full set of commands installed on the system.

- Due to the above, the sandboxed build still has (read-only) access to the rest of the file system, which makes it possible for actions to pick up ambient dependencies.

[9] This is because for many operating systems, libc is the stable syscall ABI, and to avoid recompiling for every kernel version you want to dynamically link libc. Linux is kind of an outlier in this regard.

[10] Unfortunately, Bazel doesn’t provide much help in this regard, and some manual assembly is required if you need this.